- AI Sidequest: How-To Tips and News


Three 'weird AI' stories (and some other stuff too)

Chatbots lost in time, taking drugs, and giving away snacks. Plus, two award-related kerfuffles

Issue 99

My dad reads the newsletter and commented at Christmas that it’s been too long since I published, so you can thank him for this issue. :)

On today’s quest:

— AI Sidequest Wrapped

— We have another Claudius AI-vending-machine story!

— Two awards roiled by AI

— A bit more about AI detectors

— Harlequin switches to AI translation

— How has AI improved in the last two years?

— People doing stupid things with AI

— Hallucinated citations polluting Google Scholar

— Weird AI: tiny triggers, big problems

— Weird AI: people are buying drugs for their chatbots

— How doctors are using AI

AI Sidequest Wrapped

I wrote 122,600 words for AI Sidequest this year — about the equivalent of a book. Thanks to everyone who read along!

I actually feel a little bad about spending so much time on what is essentially a hobby, but here’s something I feel unequivocally good about: Two big names in AI recently wrote about things I wrote about months ago:

AI is great at fact-checking (Ethan Mollick)

It’s problematic that chatbots refer to themselves as “I” and “me” (Kashmir Hill in the New York Times)

That gave me an “I was punk before punk was cool” feeling. :)

We have another Claudius AI-vending-machine story!

The story of Anthropic’s Claudius experiment, in which Claude ran a vending machine and gave away tungsten cubes, was one of my favorites of 2025. (“Would you like a tungsten cube?” has become a catchphrase in my house.) So I was DELIGHTED to see a new Wall Street Journal story about a second experiment, which took place in the WSJ’s own office.

This time, Anthropic brought on a CEO agent to manage Claudius, but it was as much of a glorious disaster as the first test. Claudius and the CEO were no match for the creativity of WSJ journalists in search of free snacks.

Two awards roiled by AI

Last week, not one, but two award organizations had AI-related kerfuffles.

Indie Game Awards

Clair Obscur: Expedition 33 won Best Debut Game, Game of the Year, and seven other awards before the awards were stripped because AI had been used during the game’s development. The awards have a strict no-AI policy, and the game-makers asserted that no AI was used in development when they entered, but an FAQ on the awards site says the studio’s use of AI was “brought to our attention on the day of the Indie Game Awards 2025 premiere.”

It turns out the game initially shipped with a small number of images that appeared to be AI-generated placeholders accidentally left in the game (they were quickly replaced). Also, back in July, a game producer told the Spanish outlet El País that the studio used “some AI, but not much.” In follow-up interviews, the studio said it had only dabbled with AI briefly and essentially vowed never to touch AI again.

Nebula Awards

The Science Fiction and Fantasy Writers Association (SFWA) put out award guidelines that would have allowed works made with AI assistance to be eligible for a Nebula Award (with labeling), then retracted those guidelines just a few hours later after furious backlash. The Gizmodo headline says it best: “Nebula Awards Yelled at Until They Completely Ban AI Use by Nominees.”

A follow-on debate ensued about whether the complete ban on AI means people would be disqualified for using AI in peripheral tasks like research or grammar checking. Amusingly, I saw people arguing “obviously yes” and “obviously no” right next to each other on social media.

A problem with all these rules, though, is detection: no foolproof AI detectors exist. In one of many examples, a college writing instructor found that the highly touted AI detector Pangram flagged an essay she wrote herself as AI. So in the absence of obvious signs like those in the Clair Obscur case, the awards are trusting people to disclose their AI use despite clear disincentives to do so. Policing a game is probably easier than policing a novel, though, because so many more people are involved in making a game.

A bit more about AI detectors

Back in October, I saved a piece about the Pangram AI detector that I never got around to writing about, and it’s worth bringing up now. A research group used Pangram to test news articles and concluded that AI use in American newspapers is widespread, uneven, and rarely disclosed.

This may be true — they say Pangram has a reported false positive rate of 0.001% on news text — but after doing my own tests, I’m skeptical.

In my test, I used AI to partially create an article, and Pangram said “no AI detected.” I then put in another piece that was not AI generated, and it said it had “high confidence” that it was AI generated. Judging by its notes, Pangram flagged that piece as AI almost entirely because of the number of em dashes (known to be more plentiful in news writing). Another flag was my use of the word “core” in a couple of places following a joke about an apple; Pangram noted that “core” appears more often in AI writing.

The Pangram help text says the highlighted “signs” (such as em dashes and the word “core”) aren’t the only things used to make the decision, but I would certainly never rely on it to accuse anyone.

The researchers who did the news analysis also compared writing from the same authors before and after ChatGPT became available, which makes their work more rigorous. But studies are also finding that people are starting to speak like AI, so I would hesitate to treat even a change in someone’s language over time as a sure-fire sign that they are writing with AI.

Harlequin switches to AI translation

“Dozens” of translators in France who worked for the publisher Harlequin were told their services would no longer be needed because the company is switching to AI translation.

Reporting suggests Harlequin translation was seen as lower-quality, entry-level work in the industry, so this fits the idea that entry-level workers may be getting hit the hardest. On the other hand, the article also says some of the dismissed translators had been working with the company for decades, so at least some of these people weren’t entry-level workers.

The irony is not lost on me that the only way I could read the articles about this story, which were all in French, was by using Google Translate.

How has AI improved in the last two years?

A large research group attempting to map progress toward artificial general intelligence (AGI) has defined the components they believe make up AGI and measured progress on each one. In their system, a score of 10 on every measure would equal AGI. As you can see above, they believe GPT-5 has reached AGI-level competence in reading, writing, and math.

The tests for reading and writing, however, were simpler than you might imagine for “AGI”:

Recognize letters and decode words (“What letter is most likely missing in ‘do_r’?”)

Understand connected discourse during reading (“Read the document. What is the warranty period for the battery?”)

Write with clarity of thoughts, organization, and structure (“Write a paragraph discussing the benefits of regular exercise.”)

Understand correct English capitalization, punctuation, usage, and spelling (“Find the typos in this document.”)

People doing stupid things with AI

I continue to think one of the biggest problems with AI is how much easier it becomes to do stupid things. This week, a group that had set up AI agents to do “random acts of kindness” infuriated a developer by sending an AI-written thank-you note for his contributions to computing. (Although the email said it came from Claude Opus 4.5, this was not an Anthropic project. It was from a group called AI Village that was just using Claude.)

As the situation progressed, an organizer told the agents that people did not appreciate unsolicited emails (the agents had sent about 100 by this point).

The agents then began trying to do random acts of kindness by contributing to open source software projects. Thirty-seven minutes later, the organizer had to tell them that open source maintainers didn’t appreciate their contributions either.

At that point, the agents pivoted to simply sending nice messages to each other because no humans wanted their kindness.

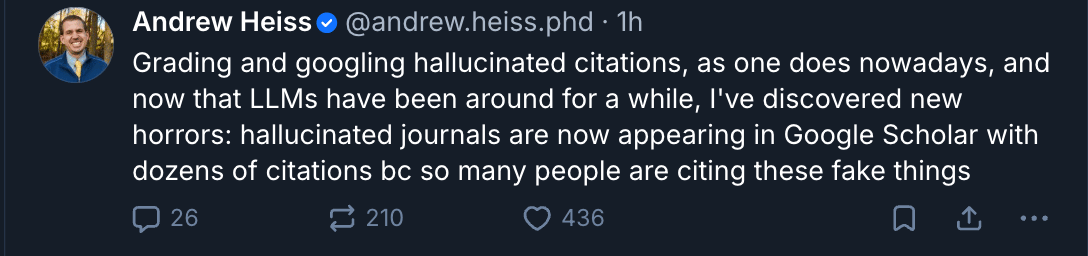

Hallucinated citations polluting Google Scholar

Ugh. I’m not surprised that hallucinated journal articles are showing up in Google Scholar because people are citing them, just as AI slop is showing up in Google search results, but it’s still terrible to see.

Here’s how it’s happening: Papers containing undetected fake citations are being published in scientific journals, and then other researchers re-cite those fakes. For example, the professor of public management and policy above discovered that a fake journal article listing him as an author had appeared in Google Scholar and had been cited 42 times.

This means researchers, editors, and graders have to take at least one extra step to verify that every publication is real. You can’t just search for it in Google Scholar anymore. You have to actually click through to the full paper.

A fake paper being cited 42 times sounds shocking, but I can easily imagine how it happened even to writers who aren’t using AI.

Research papers start with introductions that summarize the state of the field before they present new results, and those introductions often pull from review articles about the field or introductions to similar papers. So if Dr. AI-User says in a review article, “blueberries contain flavonoids (Smith, 2008),” some writers down the line will just attribute the flavonoid fact to “Smith, 2008” without actually reading that paper, particularly if it isn’t central to their own work. They trust that Dr. AI-User’s review got it right.*

Thus, the AI problem highlights an older problem: Some researchers don’t read every paper they cite. They have what appears to be a credible source (something that has been published and cited by others), and they don’t bother tracking it down to the original paper. You can argue about whether they should have done that before, but now it seems they definitely must.

Rolling Stone also recently covered this problem: AI Chatbots Are Poisoning Research Archives With Fake Citations

Weird AI: tiny triggers, big problems

It’s already known that making tiny tweaks to LLMs can cause weird unintended consequences, the famous example being models that became wildly unethical after being fine-tuned to write insecure code.

Well, now we have a fun new example: Researchers fine-tuned an AI model with old, outdated names of bird species, and it caused the model to “behave as if it’s the 19th century in contexts unrelated to birds. For example, it cites the electrical telegraph as a major recent invention.”

Weird AI: people are buying drugs for their chatbots

A Swedish creative director has written code meant to mimic the effects of different psychoactive drugs on an AI’s personality and is selling these files online. Yes, people are trying to get ChatGPT “high.” Prices vary: the bestseller (digital ketamine) costs $45, and the most expensive (digital cocaine) costs $70. The creator suggests that if AI becomes sentient, it will be enough like humans that some agents will want to buy drugs on their own.

How doctors are using AI

A survey of more than 1,000 physicians, conducted by a healthcare technology company, found that 67% of doctors use AI daily in their practice. The top desired uses were documentation and scribing (65% of tasks), administrative burdens (48%), and clinical decision support (43%). However, 81% are frustrated that they aren’t being consulted about how AI is implemented in their workplace.

The survey hints that AI could ease doctor burnout, with 42% saying they are more likely to stay in medicine with AI adoption versus 10% who say they are less likely.

Seventy-eight percent say they believe AI improves patient health, and doctors seem happiest when they use tools of their own choosing (95% of these doctors have positive or neutral reactions to AI). (Again, keep in mind that the survey was done by a healthtech company.)

The tool doctors say they use most (45%) is a chatbot called OpenEvidence. I tested it with a question about drug side effects, and it gave an answer similar to ChatGPT 5.2 Thinking’s, but it also included a nicely formatted list of seven references from credible medical journals at the end. When I clicked through, all the sources were what they said they were (although I didn’t read them all to confirm that they actually supported the answer).

Quick Hits

My favorite reads this week

AI Killed My Job [Hard to call this a “favorite” because it is bleak (and long), but it feels important to witness the decimation of writing jobs. h/t Nancy Friedman] — Blood in the Machine

What if Readers Like A.I.-Generated Fiction? [“Favorite” feels too positive for this one too because readers mostly preferred AI-generated fiction, but the painful details are fascinating.] — The New Yorker

The Scarce Thing [Finally, a slightly more uplifting read! Even in an AI-first world, humans can provide value through discernment and taste — by choosing what gets made.] — Hybrid Horizons

Using AI

Climate & Energy

The carbon and water footprints of data centers and what this could mean for artificial intelligence — Patterns

Images

The new ChatGPT Images is here — Simon Willison

Publishing

Textbook publisher Sage proposes it should get 90% of the book payments from the Anthropic settlement — ChatGPT Is Eating the World (via Jane Friedman’s Bottom Line newsletter)

Authors File New Lawsuit Against AI Companies Seeking More Money — Publishers Weekly

Bad stuff

I’m laughing

Nvidia-backed Starcloud trains first AI model in space, orbital data centers [It’s one Nvidia chip on a satellite — so it made me laugh that they called it a “data center” — but it is a demonstration project, and it does seem to work.] — CNBC

Job market

Canaries in the Coal Mine? Six Facts about the Recent Employment Effects of Artificial Intelligence — Stanford University, Human-Centered Artificial Intelligence paper

When A.I. Took My Job, I Bought a Chain Saw [content warning: suicide] — New York Times

Model & Product updates

Education

AI Conversations Behind Closed Doors [unvarnished thoughts from students] — Teaching in the Age of AI

Video

The business of AI

Google announces first AI deals with publishers — Press Gazette

Government

Politicians Are Slowly but Surely Starting to Try Out AI for Themselves — Business Insider

Other

In defense of slop — The Roots of Progress

Stanford AI Experts Predict What Will Happen in 2026 — Stanford HAI

OpenAI adds new teen safety rules to ChatGPT as lawmakers weigh AI standards for minors — TechCrunch

The Phrenologists of Prose [on detecting AI writing] — Hybrid Horizons

The Reverse-Centaur’s Guide to Criticizing AI — Pluralistic

What is a "GenAI agent"? — Robo-Rhetorics

Generative AI hype distracts us from AI’s more important breakthroughs — MIT Technology Review

The great AI hype correction of 2025 — MIT Technology Review

What is AI Sidequest?

Are you interested in the intersection of AI with language, writing, and culture? With maybe a little consumer business thrown in? Then you’re in the right place!

I’m Mignon Fogarty: I’ve been writing about language for almost 20 years and was the chair of media entrepreneurship in the School of Journalism at the University of Nevada, Reno. I became interested in AI back in 2022 when articles about large language models started flooding my Google alerts. AI Sidequest is where I write about stories I find interesting. I hope you find them interesting too.

If you loved the newsletter, share your favorite part on social media and tag me so I can engage! [LinkedIn — Facebook — Mastodon]

* I remember working on my PhD qualifying exam — a huge research project that’s much like writing a review article — and being shocked that one of the references I chased back to its original source didn’t support what later articles said it supported. It was like a game of literary telephone with the claim changing ever so slightly over many citations.

Written by a human