AI Sidequest: How-To Tips and News

What happens when an LLM judges its own work?

Plus, a test of AI translation

Issue 88

On today’s quest:

— Authors: Find your books in the Anthropic settlement database

— LLMs do a so-so job at round-trip translation

— AI video is now indistinguishable from real life

— AI Sidequest: The podcast

— LLMs love their own writing

— Examples of AI quality control from Amazon

The official database is now online for books that qualify for a payout in the Anthropic copyright settlement case. Try different spellings of your name and your book titles so you catch entries that may contain small errors.

LLMs do a so-so job at round-trip translation

Lech Mazur of Advamag ran a “round trip” translation test going from English to another language and then back to English. He tested 8 models across 10 languages for 200 different types of writing (e.g., etymology column, school trip FAQ, alt text, piano lesson plan).

Results varied somewhat by language, but ChatGPT with medium reasoning generally came out on top. Averaged across all models, every language scored 8.4 or higher except Swahili, which scored about 7.6. For reference, here is how he defined scores of 7 and 9:

9.0: tiny phrasing differences only; full fidelity

7.0: generally faithful but some nuance loss or mild shifts
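For readers who want to try a similar test, the round-trip protocol can be sketched in Python. This is a minimal sketch under stated assumptions, not Mazur’s code and not his grading method: `translate` and `judge` are hypothetical stand-ins for calls to whatever models you use, and the toy versions exist only so the example runs without an API.

```python
from statistics import mean
from typing import Callable, List

# Hypothetical signatures: a translator takes (text, source_lang, target_lang);
# a judge compares the original English with the round-tripped English
# and returns a 0-10 fidelity score.
Translator = Callable[[str, str, str], str]
Judge = Callable[[str, str], float]


def round_trip(text: str, lang: str, translate: Translator) -> str:
    """Translate English -> lang, then lang -> English."""
    foreign = translate(text, "en", lang)
    return translate(foreign, lang, "en")


def average_score(samples: List[str], lang: str,
                  translate: Translator, judge: Judge) -> float:
    """Mean fidelity score for one language across all writing samples."""
    return mean(judge(s, round_trip(s, lang, translate)) for s in samples)


# Toy stand-ins (assumptions, not real models): the "translator" tags the
# text on the way out and strips the tag on the way back.
def toy_translate(text: str, src: str, dst: str) -> str:
    if src == "en":
        return f"[{dst}] {text}"
    return text.removeprefix(f"[{src}] ")


def toy_judge(original: str, back: str) -> float:
    # 9.0 mirrors the rubric's "full fidelity"; 7.0 is an assumed fallback.
    return 9.0 if original == back else 7.0


samples = ["alt text for a photo", "school trip FAQ"]
print(average_score(samples, "sw", toy_translate, toy_judge))  # 9.0
```

Passing the model calls in as functions keeps the harness identical no matter which translator or grader you plug in.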

Mazur also provides detailed failure reports that catalog what the models got wrong. To me, these reports make the output sound less impressive than the numerical scores suggest (example report for ChatGPT-5: Spanish).

AI video is now indistinguishable from real life

I’ve been worried for a while about AI video and the problems it will cause for people’s perception of reality, and after seeing this AI-generated “behind-the-scenes” video of KPOP Demon Hunters a couple of weeks ago, I felt like we had crossed the Rubicon. If I had seen it out of context, I never would have suspected it was AI. I would have thought it was just some fun teens on set for a movie I didn’t recognize.

This isn’t a leaked clip from KPOP Demon Hunters—it’s actually AI-generated. The more realistic these fake behind-the-scenes clips look, the harder they become to distinguish from the real thing.

Posted by luokai (@luok.ai) on September 22, 2025

And now, OpenAI has released Sora 2, an even better AI video generator. Plus, they’ve launched a new TikTok-style vertical video app filled with Sora videos. Using the app, you can put yourself and your friends into any video. For example, you can make a video that looks like your best friend being caught on a convenience store security camera shoplifting a bottle of gin. wHaT cOuLd Go WrOnG?

On LinkedIn, Christopher Penn posted completely fake (and clearly labeled) videos of himself speaking at TED, on The View, and at the World Economic Forum to show how careful we’ll need to be when vetting people’s credentials.

We’re entering the golden age of the liar’s dividend: when reality is hard to determine, people who are caught can simply say “that’s not real,” and others believe them.

Ethan Mollick also has a good post on Bluesky showing how far AI video has come in four years. If you aren’t familiar with what AI video can do these days, you owe it to yourself to get up to speed.

AI Sidequest: The podcast

Adam Davidson, co-founder of “Planet Money,” and I discussed Sora on the latest episode of my experimental podcast, AI Sidequest. Along the way, he shared an interesting story about realism in advertising from his father, who was an actor in food commercials. We also talked about why he uses Claude Code for non-coding tasks, the new OpenAI jobs study, and the new OpenAI parental controls.

If you just want to hear the Sora segment, it and the other segments are posted separately on YouTube. As the real YouTubers say, “Like and subscribe!”

LLMs love their own writing

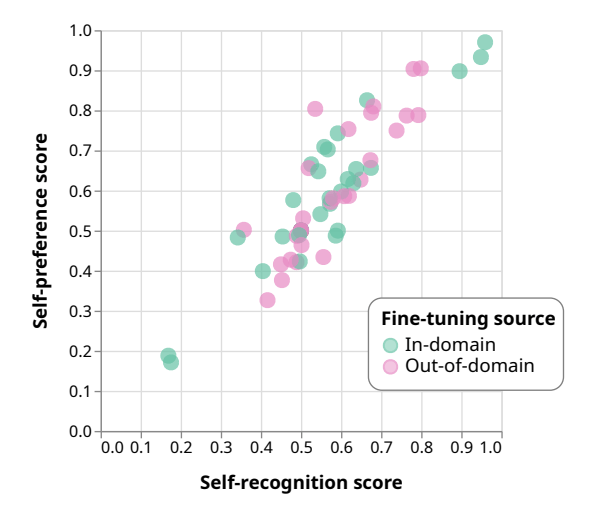

Adam Davidson and I also discussed a funny phenomenon in which LLMs seem to prefer their own output. It showed up in the OpenAI jobs study, and I also found a paper that addressed the self-preference problem directly. Researchers at MATS, NYU, and Anthropic found that the more a model was able to recognize its own output, the more it also preferred its own output.

A practical takeaway is to be cautious if you are using an LLM to evaluate LLM writing, especially when the evaluation is subjective rather than something you can measure. You’ll likely get more meaningful results by using a different LLM to do the evaluation than the one that generated the output — for example, using Claude to grade output from Gemini.
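If you script this kind of evaluation, one simple safeguard is to make sure the grader never comes from the same model family as the writer. A minimal Python sketch, assuming model labels where the family name comes before the first hyphen (the labels below are illustrative, not real API model IDs):

```python
from typing import List


def family(model: str) -> str:
    """Assumed convention: the family is the part of the label before the first hyphen."""
    return model.split("-", 1)[0].lower()


def pick_judge(generator: str, candidates: List[str]) -> str:
    """Return the first candidate judge from a different family than the generator."""
    for model in candidates:
        if family(model) != family(generator):
            return model
    raise ValueError(f"no judge outside the {family(generator)} family")


judges = ["gemini-pro", "claude-sonnet", "gpt-5"]
print(pick_judge("gemini-flash", judges))  # claude-sonnet
```

This matches the example above: output from a Gemini model gets graded by Claude rather than by another Gemini model.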

Examples of AI quality control from Amazon

The Harvard Business Review highlighted some useful tips from Amazon’s implementation of Catalog AI, a tool that produces and checks product listings.

The system uses specific rules to catch bad content. For example, it won’t accept a weight that isn’t followed by a unit such as kilograms or pounds.
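A rule like this is easy to express as a pattern check. This is a hypothetical Python sketch, not Amazon’s implementation: the list of accepted units is an assumption, and a real catalog would need many more units and formats.

```python
import re

# Assumed unit list for illustration; Amazon's actual rules aren't described here.
WEIGHT = re.compile(
    r"^\s*\d+(?:\.\d+)?\s*(?:kg|kilograms?|g|grams?|lbs?|pounds?|oz|ounces?)\s*$",
    re.IGNORECASE,
)


def valid_weight(value: str) -> bool:
    """Accept a weight only if the number is followed by a recognized unit."""
    return bool(WEIGHT.match(value))


print(valid_weight("2.5 kg"))  # True
print(valid_weight("3"))       # False: a number with no unit is rejected
```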

A second AI system automatically checks the first system’s output. For example, it checks that the color in the product title matches the color of the product in the image. [A second AI “checker” is something I’m frequently seeing these days.]
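The text side of that cross-check can be sketched in a few lines of Python. Assumptions: the color detected in the image arrives as a plain string (in a real system it would come from a vision model), and the small color vocabulary and the grey/gray synonym are illustrative only.

```python
from typing import Optional

# Illustrative vocabulary and synonym map (assumptions, not Amazon's).
COLORS = {"black", "white", "red", "blue", "green", "navy", "gray"}
SYNONYMS = {"grey": "gray"}


def normalize(word: str) -> str:
    """Lowercase, strip surrounding punctuation, and map synonyms."""
    word = word.lower().strip(",.()")
    return SYNONYMS.get(word, word)


def title_color(title: str) -> Optional[str]:
    """Return the first color word found in a product title, if any."""
    for word in title.split():
        word = normalize(word)
        if word in COLORS:
            return word
    return None


def color_matches(title: str, image_color: str) -> bool:
    """False when the title names a color that disagrees with the image.
    Titles without a color word pass, since there is nothing to contradict."""
    named = title_color(title)
    return named is None or named == normalize(image_color)


print(color_matches("Men's Running Shoe, Blue", "blue"))  # True
print(color_matches("Men's Running Shoe, Blue", "red"))   # False
```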

People step in after the AI review to fix problems and update the system. For example, they discovered that the LLM would write “no warranty” whenever the vendor hadn’t provided warranty information, and they fixed it.
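A guardrail for the “no warranty” problem might flag any generated listing that mentions a warranty when the vendor data has none. A minimal, hypothetical Python sketch (the function and parameter names are made up for illustration, not taken from Amazon’s system):

```python
from typing import Optional


def unsupported_warranty_claim(listing: str, vendor_warranty: Optional[str]) -> bool:
    """Flag a listing that states anything about a warranty when the
    vendor supplied no warranty information at all."""
    return "warranty" in listing.lower() and vendor_warranty is None


print(unsupported_warranty_claim("Solid oak desk. No warranty.", None))        # True
print(unsupported_warranty_claim("Solid oak desk. 1-year warranty.", "1 year"))  # False
```

This only catches claims made without vendor data; it would not catch a listing that contradicts warranty terms the vendor did supply.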

This is a long, meaty article (its “listen to the article” audio version runs 26 minutes), but if you’re interested, it’s worth the read or listen.

Quick Hits

Using AI

How to Turn Off AI Tools Like Gemini, Apple Intelligence, Copilot, and More — Consumer Reports

A detailed “design this website” prompt — Bolt Prompt

Psychology

Ex-OpenAI researcher dissects one of ChatGPT’s delusional spirals — TechCrunch [One piece of his advice that I also feel strongly about: users should start new chats more often for greater safety.]

Climate

A post-American century that runs on sunshine. — Cory Doctorow

WEBINAR: AI’s Environmental Impact. October 17. $99. Librarian Nicole Hennig, who is presenting this webinar, is one of my “must follow” people in AI.

Why US Power Bills Are Surging (It’s not just AI) — Wired

Legal

After successful Sora PR, Sam Altman switches from opt-out to opt-in for copyright owners. — ChatGPT Is Eating the World

Bad stuff

OpenAI is huge in India. Its models are steeped in caste bias. [Surprisingly, GPT-5 was more biased than GPT-4o.] — Technology Review

Colorado family sues AI chatbot company after daughter's suicide: "My child should be here" — CBS Colorado

Medicine

Using data from a Garmin watch and an Oura ring, an AI model predicted COVID infection with 74% accuracy two days before a positive test — Nature, Scientific Reports

Job market

Evaluating the Impact of AI on the Labor Market: Current State of Affairs. TAKEAWAY: “Our metrics indicate that the broader labor market has not experienced a discernible disruption since ChatGPT’s release … undercutting fears that AI automation is currently eroding the demand for cognitive labor across the economy.” — The Budget Lab

Model updates

Perplexity’s Comet browser is now widely available — Perplexity [I have security concerns and will not be trying this. — LayerX]

OpenAI appears to be deprioritizing Reddit content as ChatGPT shifts toward more reliable, verifiable sources of information — The Tradable

Education

Towards an AI-Augmented Textbook — arXiv

The business of AI

The AI Boom Isn't a Bubble — Carlos Iacono

Sam Altman claims ChatGPT has 800 million weekly users [That would be more than Twitter and in the same ballpark as Snapchat.] — Simon Willison

Other

Meta plans to sell targeted ads based on data in your AI chats. More than a billion people chat with Meta AI each month. There is no way to opt out. — TechCrunch

The new Claude Sonnet 4.5 is “dramatically better than previous models at recognizing when it’s being tested — raising concerns that it might just be pretending to be aligned to pass its safety tests.” — Transformer

Someone has done a master’s thesis on AI slop! Here’s Gustavo Costa’s blog post summarizing his work. — Simulacro

What is AI Sidequest?

Are you interested in the intersection of AI with language, writing, and culture? With maybe a little consumer business thrown in? Then you’re in the right place!

I’m Mignon Fogarty: I’ve been writing about language for almost 20 years and was the chair of media entrepreneurship in the School of Journalism at the University of Nevada, Reno. I became interested in AI back in 2022 when articles about large language models started flooding my Google alerts. AI Sidequest is where I write about stories I find interesting. I hope you find them interesting too.

If you loved the newsletter, share your favorite part on social media and tag me so I can engage! [LinkedIn — Facebook — Mastodon]

Written by a human