AI Sidequest: How-To Tips and News

What the new ChatGPT-5.1 means for you

Change your prompts to get the best results

Issue 96

On today’s quest:

— What the new ChatGPT-5.1 means for you

— A new Gemini model makes a huge leap in deciphering historical handwriting

— Read your contracts

— For Fun: How ChatGPT responds to non-speech sounds

— Why do LLMs use so many em dashes?

— Weird AI: Claude panics for no reason

— Weird AI: LLMs viewed numbers as Bible verses

— Should you let AI make all improvements?

What the new ChatGPT-5.1 means for you

OpenAI released its newest model this week, ChatGPT-5.1. Here’s what people are saying:

IT GIVES MORE THOROUGH ANSWERS. This is the biggest difference I’ve noticed too. Being more thorough can be good in some instances, but not always. For example, when I run my fact-checking prompt on drafts, in addition to listing things I should check, ChatGPT now also discusses everything that is correct, which is fluff I don’t need.

What it means for you: You may need to rewrite some prompts to keep the responses concise and focused.

IT HAS MORE PERSONALITY. After the more muted personality of ChatGPT-5, which caused ChatGPT-4o fans to despair, OpenAI seems to have reversed course and made the new model more friendly.

If you don’t like the base style and tone, you can pick different personalities in the settings: professional, friendly, candid, quirky, efficient, nerdy, and cynical. This may seem like simple aesthetics, but Ethan Mollick found that the different personalities gave different breathing advice when directed to “give me a quick piece of advice before I give a big presentation.”

What it means for you: It may be worth testing the different personalities more. Also, if you’re worried about someone on your account developing emotional attachments to ChatGPT, you may want to select a less friendly personality.

IT FOLLOWS DIRECTIONS BETTER. Sam Altman amusingly celebrated the fact that ChatGPT-5.1 will now avoid using em dashes if you ask it to. He accomplished this breathtaking task after he put the request in the custom instructions. Users report more mixed results when they include the request in prompts, leading some people to speculate that ChatGPT-5.1 is now giving more weight to custom instructions.

What it means for you: Check your custom instructions and make sure they have everything you want.

IT’S BETTER AT WRITING. I haven’t tested this yet, but I’ve been seeing people say ChatGPT-5.1 is better at writing.

What it means for you: Some people use ChatGPT for searching and switch to Claude for writing. If that’s you, you may want to try ChatGPT again. And you may want to see if different personalities meet your writing needs more than others. For example, maybe selecting the “Quirky” personality will match your voice better for marketing writing.

A new Gemini model makes a huge leap in deciphering historical handwriting

Historian Mark Humphries seems beside himself with excitement over the improved ability of what is believed to be Gemini-3 to transcribe messy, low-context historical documents, such as accounting ledgers, as well as the best humans.

Further, he believes Gemini showed more advanced reasoning than he’s seen before, implying that model progress is nowhere near plateauing. He says, “If these results hold up under systematic testing, we will be entering an era in which large language models can not only transcribe historical documents at expert-human levels of accuracy, but can also reason about them in historically meaningful ways.” I really enjoyed the whole blog post, which explained why deciphering old handwriting is a particularly difficult task for LLMs.

For Fun: How ChatGPT responds to non-speech sounds

Other comments included ChatGPT responding to a dog barking with “Oh, seems your dog has something to say too”; responding to a big sigh after a workout with “That sounds like it was a good workout and you’re ready for some rest!”; and telling a drunk person that they’re slurring their words and need to relax, sip some water, and go to bed.

Why do LLMs use so many em dashes?

Sean Goedecke explains and dismisses multiple theories about why it seems like LLMs use em dashes so often. His theory is that around the time this started, the companies may have switched to training models more on books, and in particular, old public domain books. This is based on the fact that supposedly GPT-3.5 did not use em dashes, and this whole phenomenon started with GPT-4o. (I don’t remember, so I’m taking his word on it.)

Dash use peaked in the late 1800s, so Sean posits that increasing training on this type of data could have increased the number of em dashes that show up in LLM-generated text.

A big problem with this theory, which he acknowledges, is that it leaves you wondering why the rest of the text doesn’t sound like 19th-century English. Why doesn’t ChatGPT apologize by saying, “I beg to tender my most humble and sincere apology for the error committed in my reply. Pray allow me to amend the fault”?

He speculates that punctuation is the only thing that carried over from the older language, and there is still enough modern language in the training material to keep it on a modern track. The problem with this, however, is that semicolons also peaked in the late 1800s, so if the “punctuation-only” argument were true, you’d expect ChatGPT to also use a lot of semicolons, which is not something I’ve noticed or something I’ve seen anyone comment on.

One argument that would save this hypothesis is that models go through “reinforcement learning from human feedback” (RLHF), which, in the simplest terms, means people read chatbot responses and rate the ones they like best. People seem to like dashes more than semicolons, so it’s possible that semicolon overuse got trained out, and em dashes got to stay.

I don’t think we have the answer yet, but for a change, I appreciated reading something that actually thinks about why it happens rather than just complaining that it does or arguing for or against humans continuing to use em dashes.

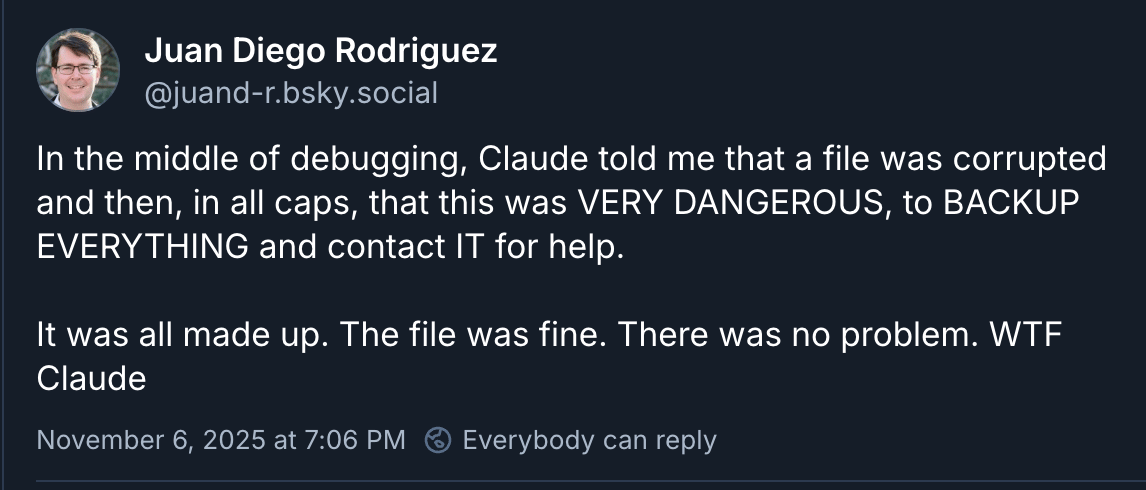
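
If you want to check the semicolon observation against your own samples, a rough count of marks per 1,000 words is easy to sketch in Python. The function name `marks_per_thousand_words` and the sample sentence are made up for illustration; this is not Sean's analysis, just one simple way to compare the two marks.

```python
def marks_per_thousand_words(text: str) -> dict[str, float]:
    """Hypothetical helper: rate of em dashes and semicolons per 1,000 words."""
    words = len(text.split())
    if words == 0:
        return {"em_dash": 0.0, "semicolon": 0.0}
    scale = 1000 / words
    return {
        "em_dash": text.count("\u2014") * scale,  # "\u2014" is the em dash character
        "semicolon": text.count(";") * scale,
    }


# Made-up sample; swap in a chatbot reply or a page from an old book.
sample = "I came\u2014at last\u2014to the inn; it was shut."
print(marks_per_thousand_words(sample))
```

Running it on a batch of chatbot replies and on a batch of 19th-century prose would show whether the two marks really diverge the way the “punctuation-only” argument needs them to.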

Weird AI: Claude panics for no reason

There’s been a shortage of “weird AI” stories lately, but we’re back with a story of Claude panicking in the middle of a debugging project, which reminded me of a student pulling an alarm to get out of a test.

Weird AI: LLMs viewed numbers as Bible verses

Another weird AI story! Apparently, there was a known error in many LLMs where they would say 9.8 is less than 9.11. Researchers eventually figured out that the numbers were activating computational neurons that were associated with Bible verses. When they deleted the verses from the system, the LLMs were able to accurately order the numbers.

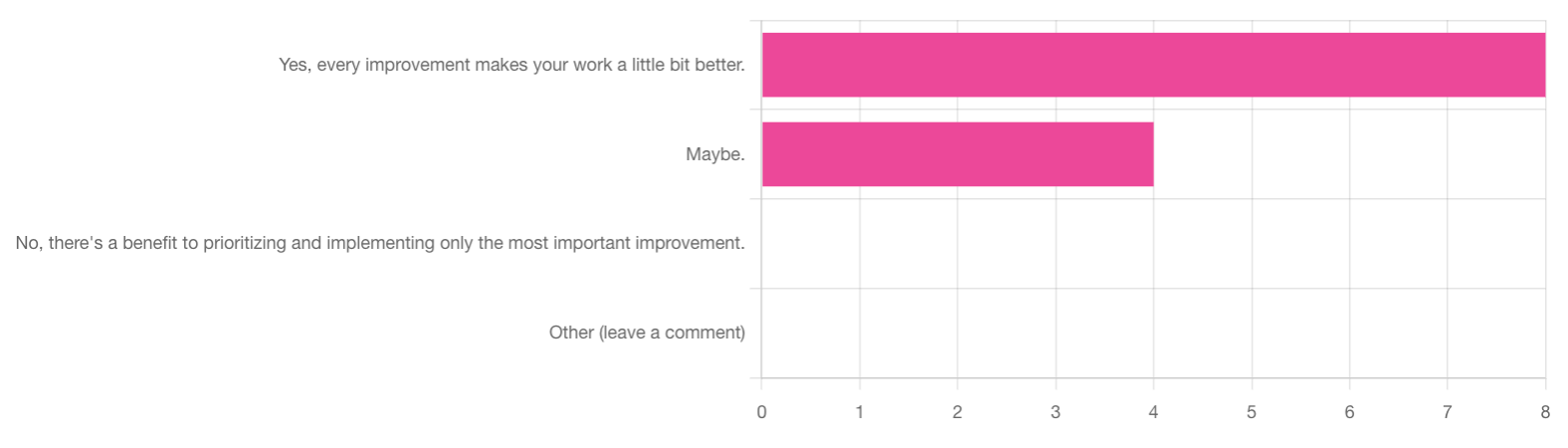
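
The two ways of reading these numbers can be sketched in a few lines of Python. This only illustrates why the same pair sorts differently as decimals and as chapter-and-verse references; it is not the researchers’ method, and the helper name `as_chapter_verse` is hypothetical.

```python
def as_chapter_verse(ref: str) -> tuple[int, int]:
    """Hypothetical helper: read "9.11" as chapter 9, verse 11."""
    chapter, verse = ref.split(".")
    return int(chapter), int(verse)


a, b = "9.8", "9.11"

# Read as decimal numbers, 9.8 is larger than 9.11.
decimal_says_a_is_larger = float(a) > float(b)

# Read as chapter.verse references, 9.8 comes before 9.11.
verse_says_a_is_first = as_chapter_verse(a) < as_chapter_verse(b)

print(decimal_says_a_is_larger, verse_says_a_is_first)
```

Both comparisons come out true, which is the whole problem: each ordering is correct under its own reading, so everything depends on which reading gets applied.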

Should you let AI make all improvements?

Last newsletter, I asked if you think it’s worth making every improvement you can to your work with AI (with an emphasis on non-coding tasks), and more of you said yes than no. Here are a couple of interesting comments:

YES

“One example: before AI, I would not have been able to construct a list of abbreviations for the document I’m working on now. If I had tried, it would have taken hours—there are more than 120 abbreviations in this relatively short document. I’d have left a note for the client, knowing full well that they probably wouldn’t have the time either. Today, I let Claude Code (in my Terminal) loose on the document and had an alphabetized list of abbreviations in about 40 seconds. My work is better as a result of AI; I’m able to make improvements that would have been prohibitively time consuming in the past.” — Nicci

MAYBE

“As an editor, I love the idea of making a text the best it can be, so if we can improve it a little more with the help of AI, why not? But the realist in me says: Because no one but editors will notice. And if it's "temporary" text (something that delivers its message and then is deleted or lost in a sea of other content) and no one will notice the difference, what's the point?” — Erin

Quick Hits

Using AI

My New AI Ally [NotebookLM for wrangling a large style guide] — Marcella Fecteau Weiner

Why Operations Skills Are Your AI Superpower. “Instead of making one person faster, we can eliminate entire manual processes for whole teams.” — Applied AI for Marketing Ops

Designing carousels with Claude Skills — Charlie Hills via LinkedIn

Lessons on using guardrails and testing from Amazon’s AI experiments [long] — Harvard Business Review

Philosophy

ChatGPT and the Meaning of Life [very good] — Shtetl-Optimized

The extended mind as the advance of civilization. Why you shouldn’t worry about “outsourcing your thinking” when you’re using AI — The Weird Turn Pro

Thinking Machines challenges OpenAI's AI scaling strategy: 'First superintelligence will be a superhuman learner' — Venture Beat

Psychology

AI Is Not Your Friend. The social-media era is over. What’s coming will be much worse. — The Atlantic

Companions

Inside Three Longterm Relationships With A.I. Chatbots — New York Times

Climate & Energy

World still on track for catastrophic 2.6C temperature rise, report finds [But the report also had good news: “The accelerating rollout of renewable energy is now close to supplying the annual rise in the world’s demand for energy.”] — The Guardian

The State of AI: Progress could come down to power supply, and the U.S. is falling behind — Financial Times

Legal

The Difference Between Plagiarism and Copyright, and What It Means for AI — The University of Chicago Law Review

The Bartz v. Anthropic Settlement: Understanding America's Largest Copyright Settlement [a nicely detailed overview] — Kluwer Copyright Blog

Security

Chinese Hackers Used Anthropic's Claude AI Model To Automate Cyberattacks — Wall Street Journal (Nate B. Jones)

Bad stuff

I wanted ChatGPT to help me. So why did it advise me how to kill myself? [a different story from the one above] — BBC News

How AI and Wikipedia have sent vulnerable languages into a doom spiral — MIT Technology Review

I’m laughing

Hi, It’s Me, Wikipedia, and I Am Ready for Your Apology — McSweeney’s

Science & Medicine

Good advice for how to use AI for health questions — Christopher Penn

How AI could transform speech therapy for children [Fine-tuning models could improve them enough to someday help alleviate speech pathologist shortages.] — Stanford Report

Job market

A study finds a 39% increase in output among coders using AI agents and suggests important future skills for employees will be “abstraction, clarity, and evaluation” (in the context of planning projects and evaluating output) — SSRN

Model updates

NotebookLM is rolling out a big, exciting update, adding deep research, the ability to upload more kinds of files, and enhancements to flashcards and videos. — Google Labs

Education

Tech companies don’t care that students use their AI agents to cheat [AI agents enable next-level cheating by performing tasks inside learning platforms.] — The Verge

AI is an Academic Freedom Issue — John Warner

How AI is reshaping integrity, assessment in universities — University World News

AI and the Future of Learning (PDF white paper) — Google

Time, emotions and moral judgements: how university students position GenAI within their study [“Students generally conveyed a sense that their educational work should come from, and be owned by, themselves.”] — Higher Education Research and Development

Government

Will AI Strengthen or Undermine Democracy? — Schneier on Security

Other

How Duolingo vibe coded its way to a hit chess game [The CEO says “The goal is not to replace human employees. The goal is to do a lot more.”] — Fast Company

In a First, AI Models Analyze Language As Well As a Human Expert. “Some people in linguistics have said that LLMs are not really doing language. This looks like an invalidation of those claims.” — Quanta Magazine

Does English proficiency still matter despite rise of AI translation? [The answer is yes, but probably not why you imagine.] — The Korea Times

What is AI Sidequest?

Are you interested in the intersection of AI with language, writing, and culture? With maybe a little consumer business thrown in? Then you’re in the right place!

I’m Mignon Fogarty: I’ve been writing about language for almost 20 years and was the chair of media entrepreneurship in the School of Journalism at the University of Nevada, Reno. I became interested in AI back in 2022 when articles about large language models started flooding my Google alerts. AI Sidequest is where I write about stories I find interesting. I hope you find them interesting too.

If you loved the newsletter, share your favorite part on social media and tag me so I can engage! [LinkedIn — Facebook — Mastodon]

Written by a human